
Cloud AI Risk Report Highlights Amazon SageMaker Root Access Exposures

The Amazon Web Services (AWS) platform was featured in a new report from Tenable on cloud AI risks, especially the Amazon SageMaker offering, where root access vulnerabilities were found to be widespread.

To be sure, fellow cloud giants Microsoft (Azure) and Google (Google Cloud Platform) received their fair share of scrutiny in the report, with the analysis finding that 70% of cloud workloads using AI services contain unresolved vulnerabilities. However, Amazon SageMaker stood out in one significant finding.

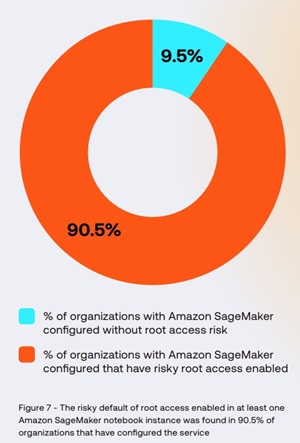

"The vast majority (90.5%) of organizations that have configured Amazon SageMaker have the risky default of root access enabled in at least one notebook instance," said the Cloud AI Risk Report 2025 from exposure management company Tenable.

That is by far the highest percentage among the report's key findings, which Tenable summarized as follows:

- Cloud AI workloads aren't immune to vulnerabilities: Approximately 70% of cloud AI workloads contain at least one unremediated vulnerability. In particular, Tenable Research found CVE-2023-38545 -- a critical curl vulnerability -- in 30% of cloud AI workloads.

- Jenga-style cloud misconfigurations exist in managed AI services: 77% of organizations have the overprivileged default Compute Engine service account configured in Google Vertex AI Notebooks. This means all services built on this default Compute Engine are at risk.

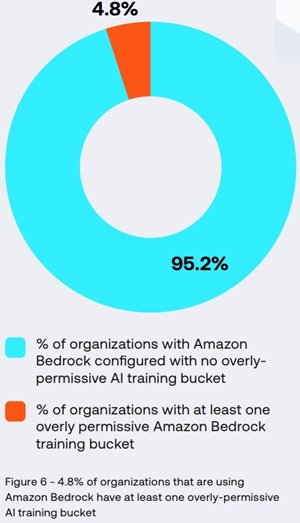

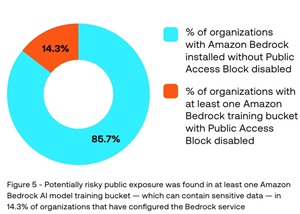

- AI training data is susceptible to data poisoning, threatening to skew model results: 14% of organizations using Amazon Bedrock do not explicitly block public access to at least one AI training bucket and 5% have at least one overly permissive bucket.

- Amazon SageMaker notebook instances grant root access by default: As a result, 91% of Amazon SageMaker users have at least one notebook that, if compromised, could grant unauthorized access, which could result in the potential modification of all files on it.
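For readers who want to check their own hosts against the curl finding, the comparison below is a minimal sketch that assumes plain "X.Y.Z" version strings. The affected range (7.69.0 through 8.3.0, fixed in 8.4.0) comes from the curl project's advisory for CVE-2023-38545 rather than from Tenable's report, and the function names are hypothetical. Because Linux distributions often backport security fixes without changing the upstream version number, a match here is a prompt to consult the vendor's advisory, not proof of exposure.

```python
# Sketch: flag curl/libcurl versions in the range affected by CVE-2023-38545.
# Per the curl project's advisory, 7.69.0 through 8.3.0 are affected and 8.4.0
# contains the fix. Parsing is deliberately simple and assumes "X.Y.Z" strings.
# Distro packages with backported fixes will still be flagged by this check.

AFFECTED_MIN = (7, 69, 0)
AFFECTED_MAX = (8, 3, 0)


def parse_version(version: str) -> tuple[int, int, int]:
    """Turn a plain 'X.Y.Z' curl version string into a comparable tuple."""
    major, minor, patch = (int(part) for part in version.strip().split("."))
    return (major, minor, patch)


def is_affected_by_cve_2023_38545(version: str) -> bool:
    """Return True if the version falls inside the affected range (inclusive)."""
    return AFFECTED_MIN <= parse_version(version) <= AFFECTED_MAX


if __name__ == "__main__":
    for v in ["7.68.0", "7.88.1", "8.3.0", "8.4.0"]:
        print(v, is_affected_by_cve_2023_38545(v))
```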

"By default, when a notebook instance is created, users who log into the notebook instance have root access," the report explained. "Granting root access to Amazon SageMaker notebook instances introduces unnecessary risk by providing users with administrator privileges. With root access, users can edit or delete system-critical files, including those that contribute to the AI model, install unauthorized software and modify essential environment components, increasing the risk if compromised. According to AWS, 'In adherence to the principle of least privilege, it is a recommended security best practice to restrict root access to instance resources to avoid unintentionally over provisioning permissions.'"

Amazon SageMaker with Root Access Enabled (source: Tenable).

"Failing to follow the principle of least privilege increases the risk of unauthorized access, allowing attackers to steal AI models and expose proprietary data. Compromised credentials can also grant access to critical resources like S3 buckets, which may contain training data, pre-trained models, or sensitive information such as PII," said Tenable. The company noted, "The consequences of such breaches are severe."

Along with Amazon SageMaker, which is a fully managed machine learning service provided by AWS that enables developers and data scientists to build, train, and deploy machine learning models at scale, the report also addressed Amazon Bedrock, a fully managed service that enables businesses to build and scale generative AI applications using foundation models from multiple AI providers without needing to manage infrastructure.

The report found that a much smaller share of organizations using Amazon Bedrock have overly permissive training buckets.

Amazon Bedrock Training Buckets Are Overly Permissive (source: Tenable).

"A small but important portion (5%) of the organizations we studied that have configured Amazon Bedrock have at least one overly-permissive training bucket," the report said. "Overly-permissive storage buckets are a familiar cloud misconfiguration; in AI environments such risks are amplified if the buckets contain sensitive data used to train or fine-tune AI models. If improperly secured, the overly-permissive buckets can be compromised by attackers to modify data, steal confidential information or disrupt the training process."

The report also called out Amazon Bedrock training buckets that did not have public access blocked.

Amazon SageMaker with Root Access Enabled (source: Tenable).

"Among the organizations that have configured Amazon Bedrock training buckets, 14.3% have at least one bucket that does not have Amazon S3 Block Public Access enabled," the report said. "The Amazon S3 Block Public Access feature, considered a best practice for securely configuring sensitive S3 buckets, is designed to prevent unauthorized access and accidental data exposure. However, we identified instances in which Amazon Bedrock training buckets lacked this protection -- a configuration that increases the risk of unintentional excessive exposure. Such oversights can leave sensitive data vulnerable to tampering and leakage -- a risk that is even more concerning for AI training data, as data poisoning is highlighted as a top security issue in the OWASP Top 10 threats for machine learning systems."

Tenable Cloud Research created the new report by analyzing the telemetry gathered from workloads across diverse public cloud and enterprise landscapes, scanned through Tenable products (Tenable Cloud Security, Tenable Nessus), the company said. The data were collected between December 2022 and November 2024, consisting of:

- Cloud asset and configuration information

- Real-world workloads in active production

- Data from AWS, Azure and GCP environments

"When we talk about AI usage in the cloud, more than sensitive data is on the line. If a threat actor manipulates the data or AI model, there can be catastrophic long-term consequences, such as compromised data integrity, compromised security of critical systems and degradation of customer trust," said Liat Hayun, VP of Research and Product Management, Cloud Security, Tenable, in a March 19 news release. "Cloud security measures must evolve to meet the new challenges of AI and find the delicate balance between protecting against complex attacks on AI data and enabling organizations to achieve responsible AI innovation."

About the Author

David Ramel is an editor and writer at Converge 360.