AWS Shares Networking Guidance for AI Model Fine-Tuning

Custom-trained AI models are a good option for organizations looking for ways to tap the latest innovations in AI but reluctant to fully commit to off-the-shelf AI models like OpenAI's GPT.

Increasingly, organizations are able to train existing AI models on their proprietary data in a process called fine-tuning. Fine-tuned models can return outputs that are more contextually relevant than those of general-purpose models. LLM providers like Amazon Web Services (AWS) and Microsoft have updated their model libraries to include fine-tuning capabilities.

However, for organizations with large volumes of data, fine-tuning a generative AI model can place heavy demands on their networks. In a blog post this week titled "Networking Best Practices for Generative AI on AWS," AWS' Hernan Terrizzano and Marcos Boaglio noted that "networking plays a critical role in all phases of the generative AI workflow: data collection, training, and deployment."

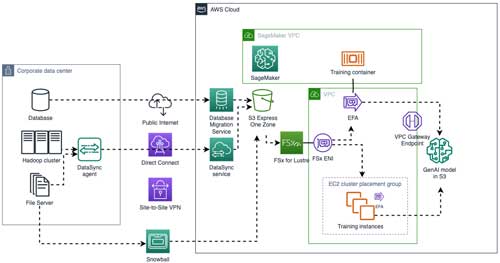

To help organizations ensure their networks are up to the task of fine-tuning AI models, they shared some guidance for architects (while promoting AWS' own solutions, of course). Below is a sample architecture they shared as reference:

Sample AWS architecture for AI model fine-tuning. (Source: AWS)

Some other highlights from their post:

Store your data in the cloud region where you plan to train your AI model: "Avoid the pitfall of accessing on-premises data sources such as shared file systems and Hadoop clusters directly from compute nodes in AWS," Terrizzano and Boaglio advised. "Protocols like Network File System (NFS) and Hadoop Distributed File System (HDFS) are not designed to work over wide-area networks (WANs), and throughput will be low."
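The post does not spell out why file protocols struggle over WANs, but one common contributing factor is latency: a single TCP stream can carry at most one window of unacknowledged data per round trip, so its throughput is bounded by window size divided by round-trip time (RTT). The sketch below is our own illustration, not from the AWS post; the 64 KiB window and the 0.5 ms (LAN-like) and 50 ms (WAN-like) RTTs are assumed values.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP stream's throughput: window size / round-trip time."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    bits_per_window = window_bytes * 8
    rtt_s = rtt_ms / 1000.0
    return bits_per_window / rtt_s / 1e6  # bits per second -> megabits per second


# Same 64 KiB window (assumed), LAN-like vs. WAN-like round-trip times (assumed).
for rtt in (0.5, 50.0):
    bound = max_tcp_throughput_mbps(64 * 1024, rtt)
    print(f"RTT {rtt:>5} ms -> at most {bound:,.1f} Mbps")
```

Raising the RTT by 100x cuts the ceiling by 100x, from roughly a gigabit to about 10 Mbps, which is one reason staging data in the training region tends to beat reading it remotely.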

For cloud data transfers, consider options besides the public Internet: "Using the public internet only requires an internet connection at the on-premises data center but is subject to internet weather. Site-to-Site VPN connections provide a fast and convenient method for connecting to AWS but are limited to 1.25 gigabits per second (Gbps) per tunnel. AWS Direct Connect delivers fast and secure data transfer for sensitive training data sets. It bypasses the public internet and creates a private, physical connection between your network and the AWS global network backbone."
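To put the 1.25 Gbps per-tunnel figure in context, the hypothetical helper below estimates how many Site-to-Site VPN tunnels a target throughput would need. It assumes traffic can be spread evenly across tunnels, which depends on how your VPN is configured and is not something this sketch checks.

```python
import math

VPN_TUNNEL_GBPS = 1.25  # per-tunnel limit cited in the AWS post


def vpn_tunnels_needed(required_gbps: float) -> int:
    """Minimum number of VPN tunnels to reach required_gbps.

    Hypothetical helper; assumes traffic is spread evenly across tunnels.
    """
    if required_gbps <= 0:
        raise ValueError("required_gbps must be positive")
    return math.ceil(required_gbps / VPN_TUNNEL_GBPS)


print(vpn_tunnels_needed(1.0))   # fits within a single tunnel
print(vpn_tunnels_needed(10.0))  # 10 / 1.25 = 8 tunnels
```

If the tunnel count gets large, that is a signal to look at a dedicated connection such as Direct Connect instead.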

If bandwidth or time is an issue, take it offline: "For larger datasets or sites with connectivity challenges, the AWS Snow Family provides offline data copy functionality. For example, moving 1 Petabyte (PB) of data using a 1 Gbps link can take 4 months, but the same transfer can be made in a matter of days using five AWS Snowball Edge Storage Optimized devices."
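The "4 months" figure follows from simple arithmetic: time equals size in bits divided by effective bit rate. The sketch below assumes a decimal petabyte (10^15 bytes) and an average link utilization we chose for illustration; the AWS post does not state what utilization it assumed.

```python
SECONDS_PER_DAY = 86_400
PETABYTE = 10**15  # decimal petabyte (assumption)
GBPS = 10**9       # 1 gigabit per second


def transfer_days(dataset_bytes: float, link_bps: float, utilization: float = 1.0) -> float:
    """Days to move dataset_bytes over a link_bps link at the given average utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    seconds = dataset_bytes * 8 / (link_bps * utilization)
    return seconds / SECONDS_PER_DAY


print(f"{transfer_days(PETABYTE, GBPS):.1f} days at 100% utilization")
print(f"{transfer_days(PETABYTE, GBPS, 0.8):.1f} days at 80% utilization")
```

Even a perfectly saturated 1 Gbps link needs about 93 days for 1 PB; at an assumed 80% utilization it is about 116 days, which lines up with the post's roughly four-month estimate.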

You can combine data transfer approaches: "Initial training data can be transferred offline, after which incremental updates are sent on a continuous or regular basis," they said. "For example, after an initial transfer using the AWS Snow Family, AWS Database Migration Service (AWS DMS) ongoing replication can be used to capture changes in a database and send them to Amazon S3."
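A hybrid plan only works if the ongoing link can absorb the rate at which the source data changes. The hypothetical check below compares a daily change volume with what a link can move in a day. The 80% utilization default and the example volumes are assumptions, and the sketch does not model AWS DMS itself.

```python
SECONDS_PER_DAY = 86_400


def daily_link_capacity_bytes(link_bps: float, utilization: float = 0.8) -> float:
    """Bytes a link can move in one day at the given average utilization (assumed)."""
    return link_bps * utilization / 8 * SECONDS_PER_DAY


def replication_keeps_up(daily_change_bytes: float, link_bps: float, utilization: float = 0.8) -> bool:
    """True if one day's changes can be sent within one day over the link."""
    return daily_change_bytes <= daily_link_capacity_bytes(link_bps, utilization)


TB = 10**12
link = 10**9  # 1 Gbps ongoing link, after an offline initial seed
print(f"capacity: {daily_link_capacity_bytes(link) / TB:.2f} TB/day")
print(replication_keeps_up(5 * TB, link))   # 5 TB/day of changes
print(replication_keeps_up(10 * TB, link))  # 10 TB/day of changes
```

If the check fails, incremental updates will fall further behind each day, and either a larger link or periodic offline refreshes would be needed.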

There's a self-managed training option for organizations with specific data privacy and security requirements: "For enterprises that want to manage their own training infrastructure, Amazon EC2 UltraClusters consist of thousands of accelerated EC2 instances that are colocated in a given Availability Zone and interconnected using EFA networking in a petabit-scale nonblocking network."

There's a managed option, too: "SageMaker HyperPod drastically reduces the complexities of ML infrastructure management. It offers automated management of the underlying infrastructure, allowing uninterrupted training sessions even in the event of hardware failures."