News

With P3 Instances, AWS Pushes Bounds of GPU Computing

Just over a month after launching its highest-capacity EC2 instance, Amazon Web Services (AWS) last week hit another milestone with the debut of its latest GPU-optimized instance family, the P3.

Available in three configurations in AWS' Northern Virginia, Oregon, Ireland and Tokyo regions, the new P3 instances are designed for highly compute-intensive workloads. Example use cases include machine learning, genomics, high-performance computing (HPC), cryptography, molecular modeling and financial analytics.

The P3 instances run on Nvidia's new Tesla V100 GPUs, which are based on the chip maker's Volta technology. According to Nvidia, each Volta GPU has the performance capability of 100 CPUs, making it especially suited for complex AI and deep learning workloads.

With the new P3 instances, AWS is promising up to 14 times the processing power of its P2 instance family, which runs on the older Tesla K80 GPUs.

As AWS evangelist Jeff Barr explained in a blog post, "Each of the NVIDIA GPUs is packed with 5,120 CUDA cores and another 640 Tensor cores and can deliver up to 125 TFLOPS of mixed-precision floating point, 15.7 TFLOPS of single-precision floating point, and 7.8 TFLOPS of double-precision floating point. On the two larger sizes, the GPUs are connected together via NVIDIA NVLink 2.0 running at a total data rate of up to 300 GBps. This allows the GPUs to exchange intermediate results and other data at high speed, without having to move it through the CPU or the PCI-Express fabric."
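To put the per-GPU figures Barr quotes in context, the short Python sketch below multiplies them by the number of GPUs in each instance size. The GPU counts (1, 4 and 8) are an assumption taken from AWS' published P3 specifications rather than from the text of this article, and the function and variable names are purely illustrative. The results are theoretical peaks, not measured performance.

```python
# Per-GPU peak throughput in TFLOPS, as quoted from Jeff Barr's post.
V100_PEAK_TFLOPS = {"mixed": 125.0, "single": 15.7, "double": 7.8}

# GPUs per instance size. Assumption: taken from AWS' published P3
# specifications; these counts are not stated in the article text.
GPUS_PER_INSTANCE = {"p3.2xlarge": 1, "p3.8xlarge": 4, "p3.16xlarge": 8}


def aggregate_peak_tflops(instance: str) -> dict:
    """Return the theoretical peak per precision: per-GPU peak times GPU count."""
    gpu_count = GPUS_PER_INSTANCE[instance]
    return {
        precision: round(tflops * gpu_count, 1)
        for precision, tflops in V100_PEAK_TFLOPS.items()
    }


if __name__ == "__main__":
    for name in GPUS_PER_INSTANCE:
        print(name, aggregate_peak_tflops(name))
```

Under these assumptions, the largest size works out to roughly 1,000 TFLOPS (about one petaflop) of peak mixed-precision throughput.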

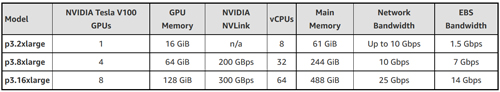

The three P3 instance configurations are described in the table below:

[Click on image for larger view.] Source: AWS/Jeff Barr

[Click on image for larger view.] Source: AWS/Jeff Barr

The largest configuration, the p3.16xlarge, is 781,000 times faster at performing vector operations than the Cray-1 supercomputer, according to Barr, though that system is over four decades old. Comparisons to modern supercomputers are harder to pin down, he said, "given that you can think of the P3 as a step-and-repeat component of a supercomputer that you can launch on an as-needed basis."

Incidentally, AWS cloud rival Microsoft recently announced a partnership with Cray to bring the latter's supercomputing capabilities to Microsoft's Azure cloud platform.

In a separate announcement last week, Nvidia said that P3 instance users will be able to take advantage of the Nvidia GPU Cloud (NGC) container registry for AI developers. According to Nvidia, the NGC is designed to enable "deep learning development through no-cost access to a comprehensive, easy-to-use, fully optimized deep learning software stack."

More information on the NGC is available here.