
AWS Uses Advanced LLMs to Simplify Agentic AI with Open Source Strands SDK

Amazon Web Services (AWS) introduced the open-source Strands Agents SDK, which uses large language models (LLMs) to automate the creation and operation of AI agents, reducing the need for manual workflow coding.

Strands Agents, available on GitHub, lets developers create AI agents by defining a prompt and a set of tools, while the model handles task planning and tool invocation. It supports multiple LLM providers -- including Amazon Bedrock, Anthropic, Meta, and Ollama -- and includes many ready-made tools for tasks like file handling, API calls, and AWS service integration. According to the company, this design simplifies agent development and shortens the path from prototype to production.
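To make that division of labor concrete, here is a minimal, library-free Python sketch of a model-driven agent loop. The developer supplies a prompt and a dictionary of tools, and the model decides at each step whether to call a tool or return a final answer. Everything in it (`run_agent`, `ToolCall`, `ModelReply`, the `Model` interface, and the scripted stand-in model) is hypothetical and is not the Strands API; a real agent would put an LLM behind `respond`.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Protocol


@dataclass
class ToolCall:
    """A model's request to run one named tool with keyword arguments."""
    name: str
    args: Dict


@dataclass
class ModelReply:
    """Either a final answer (text) or a request to call a tool."""
    text: str = ""
    tool_call: Optional[ToolCall] = None


class Model(Protocol):
    """Hypothetical provider interface: any object with this method can drive the loop."""
    def respond(self, messages: List[Dict]) -> ModelReply: ...


def run_agent(model: Model, tools: Dict[str, Callable], prompt: str, max_cycles: int = 10) -> str:
    """Let the model plan and invoke tools until it produces a final answer."""
    messages: List[Dict] = [{"role": "user", "content": prompt}]
    for _ in range(max_cycles):
        reply = model.respond(messages)
        if reply.tool_call is None:
            # No tool requested: the model considers the task complete.
            return reply.text
        call = reply.tool_call
        result = tools[call.name](**call.args)
        # Record the request and feed the tool's result back for the next step.
        messages.append({"role": "assistant", "content": f"calling {call.name}"})
        messages.append({"role": "tool", "content": str(result)})
    raise RuntimeError("agent did not finish within max_cycles")


class ScriptedModel:
    """Stand-in for an LLM: requests one tool call, then answers with its result."""
    def respond(self, messages: List[Dict]) -> ModelReply:
        last = messages[-1]
        if last["role"] == "user":
            return ModelReply(tool_call=ToolCall(name="add", args={"a": 2, "b": 40}))
        return ModelReply(text=f"The sum is {last['content']}.")


tools = {"add": lambda a, b: a + b}
print(run_agent(ScriptedModel(), tools, "What is 2 + 40?"))  # The sum is 42.
```

The `max_cycles` cap is a design choice worth keeping in any loop like this: it stops a model that keeps requesting tools from running indefinitely.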

This approach wasn't practical with earlier LLMs, which were mostly used for chat rather than for reasoning or tool use. AWS developers recognized that newer, more capable LLMs can now handle reasoning, planning, and tool invocation on their own.

AWS's Clare Liguori, who works on the Amazon Q Developer AI-powered coding assistant, noted in a May 16 blog post that the idea for Strands came from the team noticing how far LLMs had advanced. She also cited a 2023 scientific paper that documented improvements in LLMs' abilities to reason and to use tools to complete tasks.

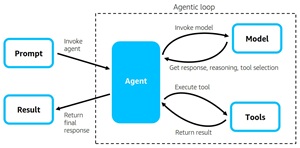

The Agentic Loop (source: AWS).

"We realized that we no longer needed such complex orchestration to build agents, because models now have native tool-use and reasoning capabilities," she said. "In fact, some of the agent framework libraries we had been using to build our agents started to get in our way of fully leveraging the capabilities of newer LLMs. Even though LLMs were getting dramatically better, those improvements didn't mean we could build and iterate on agents any faster with the frameworks we were using. It still took us months to make an agent production-ready."

Strands Agents thus came into being as the Q Developer team sought to simplify agent development by leveraging the models' own capabilities rather than complex orchestration logic. The approach significantly improved user experiences and cut deployment times from months to just days or weeks. As for the name: "Like the two strands of DNA, Strands connects two core pieces of the agent together: the model and the tools."

The post explains that developers build an agent with the SDK by specifying three components in code:

- Model: Strands offers flexible model support. You can use any model in Amazon Bedrock that supports tool use and streaming, a model from Anthropic's Claude model family through the Anthropic API, a model from the Llama model family via Llama API, Ollama for local development, and many other model providers such as OpenAI through LiteLLM. You can additionally define your own custom model provider with Strands.

- Tools: You can choose from thousands of published Model Context Protocol (MCP) servers to use as tools for your agent. Strands also provides 20+ pre-built example tools, including tools for manipulating files, making API requests, and interacting with AWS APIs. You can easily use any Python function as a tool by simply using the Strands `@tool` decorator.

- Prompt: You provide a natural language prompt that defines the task for your agent, such as answering a question from an end user. You can also provide a system prompt that provides general instructions and desired behavior for the agent.
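The `@tool` idea can be illustrated with a hypothetical, standard-library-only version: a decorator that reads a function's name, docstring, and type hints and attaches a JSON-Schema-style description a model could use to decide when and how to call it. This is not the SDK's implementation; `tool`, `tool_spec`, and `AgentSpec` are illustrative names, and the model ID is a placeholder. The real decorator ships with Strands and is described in its documentation.

```python
import inspect
from dataclasses import dataclass
from typing import Callable, Dict, List

# Map a few Python annotations to JSON Schema type names (anything else -> "string").
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool(func: Callable) -> Callable:
    """Hypothetical decorator: attach a tool description derived from the function."""
    params = inspect.signature(func).parameters
    func.tool_spec = {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {
            "type": "object",
            "properties": {n: {"type": _JSON_TYPES.get(p.annotation, "string")} for n, p in params.items()},
            # Parameters without default values are marked as required.
            "required": [n for n, p in params.items() if p.default is inspect.Parameter.empty],
        },
    }
    return func  # the function stays directly callable


@tool
def word_count(text: str) -> int:
    """Count the words in a piece of text."""
    return len(text.split())


@dataclass
class AgentSpec:
    """Hypothetical bundle of the post's three components."""
    model_id: str            # Model: which provider/model to use (placeholder below)
    tools: List[Callable]    # Tools: decorated functions the model may call
    system_prompt: str = ""  # Prompt: general instructions; the task prompt comes per request

    def tool_specs(self) -> List[Dict]:
        # The descriptions a model would see when choosing which tool to call.
        return [t.tool_spec for t in self.tools]


spec = AgentSpec(model_id="example-model-id", tools=[word_count], system_prompt="Be concise.")
print(spec.tool_specs()[0]["parameters"]["required"])  # ['text']
print(word_count("Strands connects the model and the tools"))  # 7
```

Deriving the description from the function itself keeps the tool's documentation and its schema in one place, which is the convenience the decorator approach is meant to offer.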

The post also goes on to detail various deployment architectures for Strands Agents, including local client-based deployment, API-driven setups via AWS Lambda, Fargate, or EC2, isolated backend environments, and hybrid deployments mixing local and backend-hosted tools. Additionally, it highlights Strands' built-in support for observability through OpenTelemetry, enabling distributed tracing and performance monitoring in production environments.
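For the API-driven option, a Lambda deployment generally comes down to a handler that parses the request, runs the agent, and returns a response. The sketch below assumes an API Gateway proxy-style event whose `body` is a plain (not base64-encoded) JSON string with a `prompt` field; that request shape is an assumption, and `invoke_agent` is a hypothetical placeholder where a real Strands agent call would go.

```python
import json
from typing import Any, Dict


def invoke_agent(prompt: str) -> str:
    """Hypothetical placeholder for running an agent; a real deployment would call the SDK here."""
    return f"Agent received: {prompt}"


def _error(status: int, message: str) -> Dict[str, Any]:
    """Build a proxy-style error response with a JSON body."""
    return {"statusCode": status, "body": json.dumps({"error": message})}


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda entry point for a proxy-style HTTP event (assumes a non-base64 body)."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _error(400, "request body must be JSON")
    if not isinstance(body, dict) or not body.get("prompt"):
        return _error(400, "missing 'prompt' field")
    answer = invoke_agent(body["prompt"])
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"response": answer}),
    }


# Local smoke test with a hand-built event; `context` is unused here.
print(handler({"body": json.dumps({"prompt": "hello"})}, None)["statusCode"])  # 200
```

Keeping the agent call behind a single function means the same logic could, in principle, be reused on Fargate or EC2 with only the entry point changing.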

AI tells us that Strands Agents joins several similar open-source projects for simplifying and enhancing the development of AI agent systems, providing frameworks for advanced model integration, agent orchestration, and flexible deployment. These include:

- OpenAI Agents SDK: Lightweight Python framework for building agents using OpenAI's models.

- LangChain / LangGraph: Popular toolkit for creating complex, chain-based and multi-agent LLM applications.

- AutoGen (Microsoft): Framework for building multi-agent conversational systems with customizable interactions.

- CrewAI: Multi-agent collaboration framework emphasizing clearly defined roles and team workflows.

- ModelScope-Agent: Agent framework providing flexible integration with various open-source LLMs.

For more information on the Strands Agents SDK, consult its documentation, which details the pre-built example tools mentioned above, including offerings in these categories:

- RAG & Memory

- File Operations

- Shell & System

- Code Interpretation

- Web & Network

- Multi-modal

- AWS Services

- Utilities

- Agents & Workflows

About the Author

David Ramel is an editor and writer at Converge 360.