News

Brand-New DeepSeek AI on AWS: When to Use Bedrock or SageMaker

Amazon has embraced controversial new DeepSeek AI tech from a Chinese startup despite concerns about data security, privacy, compliance, and national security risks that have caused many organizations to restrict its usage in the workforce.

This week, Amazon announced that it has integrated DeepSeek AI into the SageMaker and Bedrock platforms in its AWS cloud, which data scientists and developers use to build, train, and deploy machine learning models. The company is not alone: the other two cloud giants, Microsoft and Google, have also integrated DeepSeek AI into their platforms. Judging from announcements and related documentation, however, Google's DeepSeek initiatives are more limited than those of Amazon and Microsoft (see "Cloud Giants Offer DeepSeek AI, Restricted by Many Orgs, to Devs").

Amazon first announced its DeepSeek AI integration in a LinkedIn post by AWS CEO Matt Garman:

DeepSeek R1 is the latest foundation model to capture the imagination of the industry. We've always been focused on making it easy to get started with emerging and popular models right away, and we're giving customers a lot of ways to test out DeepSeek AI.

Today, customers can run the distilled Llama and Qwen DeepSeek models on Amazon SageMaker AI, use the distilled Llama models on Amazon Bedrock with Custom Model Import, or train DeepSeek models with SageMaker via Hugging Face.

We've always believed that no single model is right for every use case, and customers can expect all kinds of new options to emerge in the future.

That's why Amazon Bedrock and Amazon SageMaker AI make it seamless for customers to test and evaluate the latest models as they emerge and pick the best ones based on their unique needs.

That was followed up by a Jan. 30 post, "DeepSeek-R1 models now available on AWS," along with "DeepSeek-R1 model now available in Amazon Bedrock Marketplace and Amazon SageMaker JumpStart."

"DeepSeek launched DeepSeek-V3 on December 2024 and subsequently released DeepSeek-R1, DeepSeek-R1-Zero with 671 billion parameters, and DeepSeek-R1-Distill models ranging from 1.5-70 billion parameters on Jan. 20, 2025," said Channy Yun, a principal developer advocate, in that first post. "They added their vision-based Janus-Pro-7B model on Jan. 27, 2025. The models are publicly available and are reportedly 90-95% more affordable and cost-effective than comparable models. Per DeepSeek, their model stands out for its reasoning capabilities, achieved through innovative training techniques such as reinforcement learning."

The second post notes that "Because DeepSeek-R1 is an emerging model, we recommend deploying this model with guardrails in place," and explains how to use Amazon Bedrock Guardrails to introduce safeguards, prevent harmful content, and evaluate models against key safety criteria.

Along with emphasizing safety, AWS advised which offering suits which use cases.

First, to summarize each:


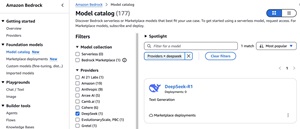

Amazon Bedrock is a fully managed service that simplifies the process of building and scaling generative AI applications. It provides access to a variety of high-performing foundation models (FMs) from leading AI companies through a single API. Users can experiment with and evaluate these models, customize them with their own data using techniques like fine-tuning and Retrieval Augmented Generation (RAG), and build agents that perform tasks using enterprise systems and data sources. Since it's serverless, there's no need to manage infrastructure, making it easy to integrate and deploy generative AI capabilities into applications using familiar AWS services.

DeepSeek in Bedrock (source: AWS).


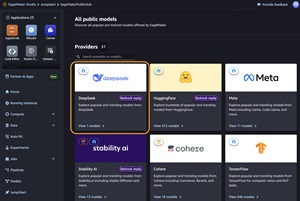

Amazon SageMaker is a fully managed service that helps data scientists and developers build, train, and deploy machine learning models at scale. It simplifies the machine learning process by providing tools for every step of the workflow, from data preparation to model deployment. SageMaker includes integrated Jupyter notebooks for data exploration and preprocessing, built-in algorithms optimized for speed and accuracy, automatic model tuning to improve performance, and one-click deployment for seamless scaling. It also supports both supervised and unsupervised learning and provides a secure, managed environment for training and inferencing models. Essentially, SageMaker streamlines the entire machine learning lifecycle, making it easier and faster to develop high-quality models.

DeepSeek in SageMaker (source: AWS).

Bedrock or SageMaker?

"Amazon Bedrock is best for teams seeking to quickly integrate pre-trained foundation models through APIs," Yun said. "Amazon SageMaker AI is ideal for organizations that want advanced customization, training, and deployment, with access to the underlying infrastructure."

Yun detailed how to use each service, again emphasizing Amazon Bedrock Guardrails, which developers can use to evaluate user inputs and model outputs independently. With a defined set of policies, Guardrails controls the interaction between users and DeepSeek-R1 by filtering undesirable and harmful content in GenAI applications.

However, he noted, "The DeepSeek-R1 model in Amazon Bedrock Marketplace can only be used with Bedrock's ApplyGuardrail API to evaluate user inputs and model responses for custom and third-party FMs available outside of Amazon Bedrock."
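The pattern described here, checking user inputs and model responses with ApplyGuardrail independently of the model call, might be sketched as follows. The guardrail ID, the `DRAFT` version default, and the helper names are hypothetical. The request fields follow the Bedrock Runtime `ApplyGuardrail` operation as exposed by boto3's `apply_guardrail` and should be confirmed against current documentation. The model call is passed in as a plain function, so the wrapper does not depend on which FM sits behind it.

```python
from typing import Any, Callable, Dict, Optional


def build_guardrail_request(
    text: str, source: str, guardrail_id: str, version: str
) -> Dict[str, Any]:
    """Build keyword arguments for a Bedrock Runtime ApplyGuardrail call."""
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    return {
        "guardrailIdentifier": guardrail_id,  # hypothetical ID supplied by the caller
        "guardrailVersion": version,
        "source": source,
        "content": [{"text": {"text": text}}],
    }


def is_blocked(response: Dict[str, Any]) -> bool:
    """Return True when the guardrail reports that it intervened."""
    return response.get("action") == "GUARDRAIL_INTERVENED"


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    apply_guardrail: Callable[..., Dict[str, Any]],
    guardrail_id: str,
    version: str = "DRAFT",
) -> Optional[str]:
    """Check the prompt, call the model, then check the answer.

    Returns None if the guardrail intervenes on either side.
    """
    request = build_guardrail_request(prompt, "INPUT", guardrail_id, version)
    if is_blocked(apply_guardrail(**request)):
        return None
    answer = generate(prompt)
    request = build_guardrail_request(answer, "OUTPUT", guardrail_id, version)
    if is_blocked(apply_guardrail(**request)):
        return None
    return answer
```

In a real application, `apply_guardrail` would typically be `boto3.client("bedrock-runtime").apply_guardrail`, and `generate` would wrap the call to the deployed DeepSeek-R1 endpoint.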

He also explained using DeepSeek-R1 with Amazon SageMaker JumpStart, described as an ML hub featuring foundation models (FMs), built-in algorithms, and prebuilt ML solutions that can be deployed quickly.
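As a rough sketch of what a JumpStart deployment might look like with the SageMaker Python SDK: the model ID, the instance type, and the request payload shape below are assumptions to verify against the JumpStart model card, not values confirmed by the posts. The `sagemaker` package is imported lazily, so the payload helper runs without it.

```python
from typing import Any, Dict

# Assumed values for illustration only; check the JumpStart model card before use.
ASSUMED_MODEL_ID = "deepseek-llm-r1"
ASSUMED_INSTANCE_TYPE = "ml.p5e.48xlarge"


def build_payload(prompt: str, max_new_tokens: int = 256) -> Dict[str, Any]:
    """Build a text-generation request in the common inputs/parameters shape."""
    return {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}


def deploy_and_ask(prompt: str) -> Any:
    """Deploy the model to a real-time endpoint, send one prompt, then clean up."""
    # Requires the sagemaker package, AWS credentials, and an execution role.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(
        model_id=ASSUMED_MODEL_ID, instance_type=ASSUMED_INSTANCE_TYPE
    )
    predictor = model.deploy()
    try:
        return predictor.predict(build_payload(prompt))
    finally:
        predictor.delete_endpoint()  # endpoints are billed while they are running
```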

And again, those safety guardrails come into play.

"As like Bedrock Marketplace, you can use the ApplyGuardrail API in the SageMaker JumpStart to decouple safeguards for your generative AI applications from the DeepSeek-R1 model," he said. "You can now use guardrails without invoking FMs, which opens the door to more integration of standardized and thoroughly tested enterprise safeguards to your application flow regardless of the models used."

Other Options

Along with the Bedrock and SageMaker guidance, Yun noted alternatives.

- Amazon Bedrock Custom Model Import for the DeepSeek-R1-Distill models: This helps users bring in and utilize customized models alongside existing FMs through a single serverless API, eliminating the need for infrastructure management. Users can import DeepSeek-R1-Distill Llama models ranging from 1.5 to 70 billion parameters. The distillation process involves training smaller, more efficient models to replicate the behavior and reasoning patterns of the larger DeepSeek-R1 model with 671 billion parameters, using it as a teacher model.

- Amazon EC2 Trn1 instances for the DeepSeek-R1-Distill models: AWS Deep Learning AMIs (DLAMI) offer tailored machine images for deep learning across various Amazon EC2 instances, from small CPU-only setups to powerful multi-GPU configurations. Deploying DeepSeek-R1-Distill models on AWS Trainium or AWS Inferentia instances provides optimal price-performance.
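For the Custom Model Import route, an imported distilled model is invoked through the Bedrock Runtime API using the ARN of the imported model. The sketch below assumes a `prompt`/`max_tokens`/`temperature` request body, which depends on the imported model's format, and a caller-supplied ARN; neither comes from the posts. `boto3` is imported lazily so the request builder has no AWS dependency.

```python
import json
from typing import Any, Dict


def build_invoke_kwargs(
    model_arn: str, prompt: str, max_tokens: int = 512, temperature: float = 0.6
) -> Dict[str, Any]:
    """Build keyword arguments for bedrock-runtime invoke_model."""
    # Assumed body schema; verify it against the imported model's documentation.
    body = {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    return {
        "modelId": model_arn,
        "body": json.dumps(body),
        "contentType": "application/json",
        "accept": "application/json",
    }


def invoke_imported_model(
    model_arn: str, prompt: str, region: str = "us-east-1"
) -> Dict[str, Any]:
    """Send one prompt to an imported model and return the parsed JSON response."""
    import boto3  # requires AWS credentials with Bedrock access

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(**build_invoke_kwargs(model_arn, prompt))
    return json.loads(response["body"].read())
```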

Read the posts for further information on pricing, data security and more.

About the Author

David Ramel is an editor and writer at Converge 360.